Deep Research on Dify

📖 Overview

This workflow, built on the Dify platform, reproduces the core functionality of Deep Research. By combining multi-source retrieval (local knowledge bases plus web search) with multi-model collaboration, it can generate structured research reports of more than ten thousand characters within 5 minutes. Its modular design lets you swap out the underlying models and data sources.

✨ Core Features

- Smart Topic Analysis: Multi-level topic decomposition that supports four-dimensional in-depth analysis using the Gemini 2.0 Flash model

- Hybrid Search Engine: Multi-channel retrieval from local knowledge bases and the Wikipedia, Google, and Bing APIs

- Dynamic Rhythm Control: Uses a 2>1 model collaboration architecture and optimizes the processing rhythm through conditional branching and conversation-round tags

- Efficient Report Generation: Paragraph-level content generation that integrates models such as deepseek-r1-distill and supports structured output in Markdown format
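The README does not say how hits from the local knowledge base and the Wikipedia/Google/Bing channels are combined. As an illustration only, one common technique for merging several ranked result lists is reciprocal rank fusion (RRF). The sketch below assumes each channel returns a ranked list of document IDs; the function name and the `k=60` constant are assumptions, not part of this workflow.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into a single ranking.

    Hypothetical helper: each document scores sum(1 / (k + rank)) over every
    list it appears in, with rank starting at 1. A document found near the
    top of several channels therefore outranks one found by a single channel.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; ties are broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


local_kb = ["kb:a", "kb:b"]   # e.g. hits from the local knowledge base
web = ["kb:a", "web:c"]       # e.g. hits from a web search channel
print(reciprocal_rank_fusion([local_kb, web]))
```

RRF only needs ranks, not comparable relevance scores, which is why it is a frequent choice when the channels (vector search, Wikipedia, web search engines) score results on different scales.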

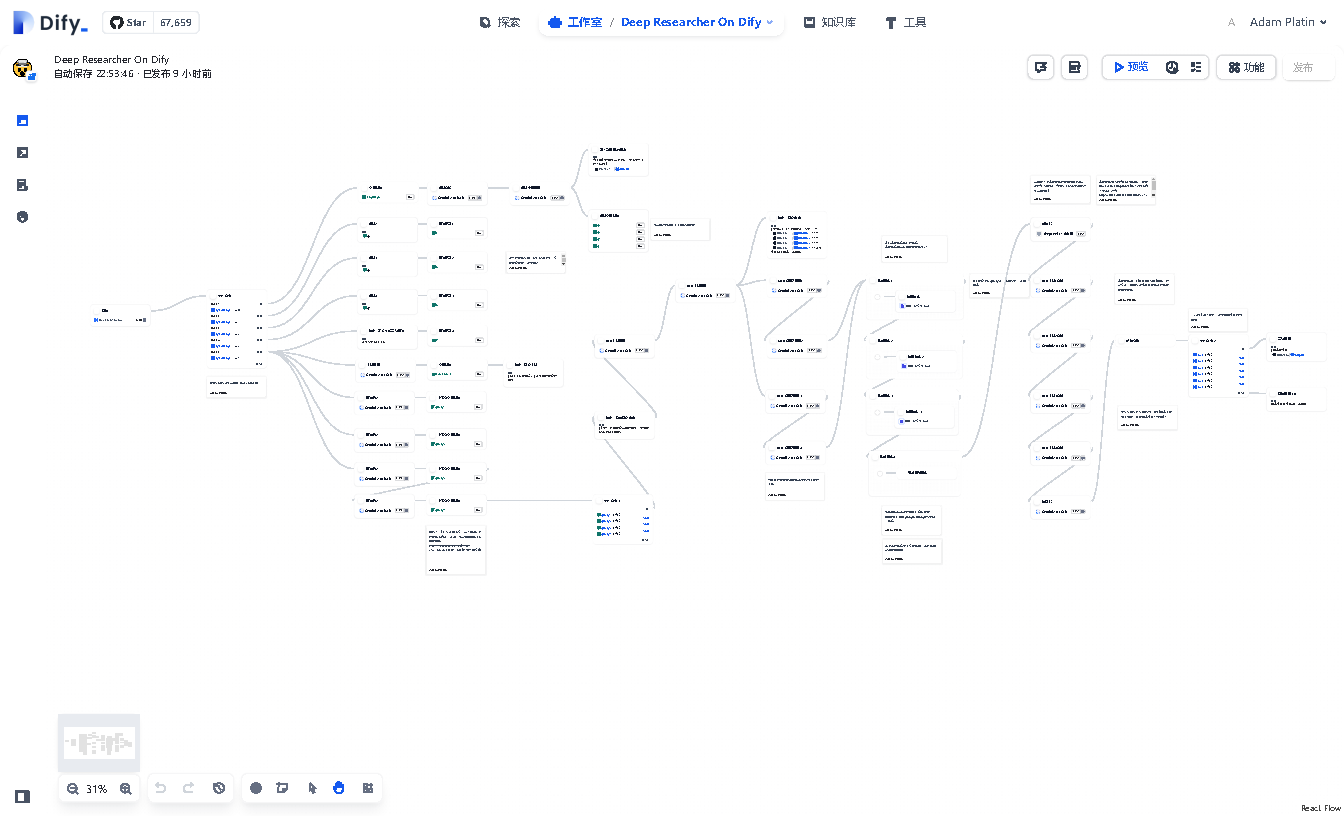

🛠️ Technical Architecture

graph TD
    A[User Question] --> B{Topic Analysis}
    B --> F[Question Generation]
    F --> H[User Answer]
    H --> C
    B --> I[Topic Analysis]
    I --> C{Secondary Topic Extraction}
    C --> D[Hybrid Iterative Search Engine]
    D --> E[Multi-Model Collaboration Generation]
    E --> G[Structured Report]
    style B fill:#4CAF50,stroke:#388E3C
    style D fill:#2196F3,stroke:#1565C0
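Node B in the diagram is a decision point that either generates clarifying questions or goes straight to topic analysis. Assuming, as the Dynamic Rhythm Control feature suggests, that this branch is chosen from a conversation-round tag, the routing could be sketched as follows. The `Conversation` type, its `round_tag` field, and the branch names are hypothetical; inside Dify the same decision would be expressed with a conditional-branch (IF/ELSE) node rather than Python.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    # Hypothetical state: the 1-based index of the current user turn.
    round_tag: int
    question: str


def route(conv: Conversation) -> str:
    """Choose the branch leaving node B (an assumed reading of the diagram).

    First round: generate clarifying questions and wait for the user's
    answer (B -> F -> H). Later rounds: continue with topic analysis
    (B -> I), which then feeds secondary topic extraction (C).
    """
    if conv.round_tag <= 1:
        return "question_generation"
    return "topic_analysis"


print(route(Conversation(round_tag=1, question="How does RAG work?")))
```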

⚠️ Notes

Performance Optimization Suggestions

In principle, the workflow supports any model, but running local models under high request load may cause timeout errors in the LLM node. In that case, consider switching to a cloud API service or increasing the timeout in Dify's configuration file. Users of the free Google API tier can insert a local model node to limit RPM (requests per minute): Google's default limit is 15 RPM, and sending many requests in a short time will cause errors.
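Inside Dify, throttling is done by restructuring the workflow as described above. If you call the Google API from your own client code instead, a sliding-window limiter is one way to stay under the 15 RPM limit. This is a minimal sketch under that assumption: the `SlidingWindowLimiter` class and its parameters are hypothetical, and it makes no API calls itself. The clock and sleep functions are injectable so the logic can be tested without real waiting.

```python
import time
from collections import deque
from typing import Callable


class SlidingWindowLimiter:
    """Allow at most `max_requests` requests in any `window`-second span."""

    def __init__(self, max_requests: int = 15, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.max_requests = max_requests
        self.window = window
        self.clock = clock
        self.sleep = sleep
        self._stamps: deque[float] = deque()  # send times of recent requests

    def wait_time(self) -> float:
        """Seconds until the next request may be sent (0.0 if allowed now)."""
        now = self.clock()
        # Forget requests that have slid out of the window.
        while self._stamps and now - self._stamps[0] >= self.window:
            self._stamps.popleft()
        if len(self._stamps) < self.max_requests:
            return 0.0
        # The oldest request in the window must expire before a slot frees up.
        return self.window - (now - self._stamps[0])

    def try_acquire(self) -> bool:
        """Record a request and return True if a slot is free, else False."""
        if self.wait_time() > 0:
            return False
        self._stamps.append(self.clock())
        return True

    def acquire(self) -> None:
        """Block (via `sleep`) until a slot is free, then record the request."""
        delay = self.wait_time()
        while delay > 0:
            self.sleep(delay)
            delay = self.wait_time()
        self._stamps.append(self.clock())


limiter = SlidingWindowLimiter(max_requests=15, window=60.0)
limiter.acquire()  # call before each request to the API
```

A sliding window is stricter than resetting a counter every minute: it never lets more than 15 requests through in any 60-second span, even across a minute boundary.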

To Do List

- Optimize the processing flow logic and adjust the trade-off between RPM and processing time
- Fix the issue where multiple subtitles are occasionally displayed in the answer
- Perform a major refactoring of the workflow to implement a Q&A system that adapts to the complexity of user questions

License

LGPL-3.0 License